ISO 26262 Verification Methods: boundary values and error guessing

In the first part of this blog series, we discussed verification in ISO 26262 using equivalence classes. In this second instalment, we continue with further methods for deriving test cases: building on equivalence classes, we discuss boundary value analysis and error guessing.

Many customers struggle to understand the various testing methods in the ISO 26262 standard. To put it diplomatically, ISO 26262 Parts 4, 5 and 6 list methods such as equivalence classes and boundary values for deriving test cases, but they explain little about these methods in detail. ISO 26262 was derived from IEC 61508, the mother of all safety standards, and the methodologies of the two standards still overlap in several areas. More helpful guidance on equivalence classes, boundary values and error guessing can therefore be found in IEC 61508.

According to IEC 61508-7, boundary value analysis is used to detect software errors at parameter limits or boundaries. The input domain is first divided into classes using the equivalence class method, and the derived test cases then cover the boundaries and extremes of these classes.

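The tests check the boundaries of the input domain, i.e. that the boundaries in the specification coincide with those in the implementation. The use of the value zero, in a direct as well as in an indirect translation, is often error-prone and demands special attention, for example:

- zero divisor
- blank ASCII characters
- empty stack or list element
- full matrix
- zero table entry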
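A zero divisor is the classic example of such a zero-value case. The following minimal Python sketch uses a hypothetical function `average_cell_voltage_mv()` (not taken from any real code base) that rejects a zero cell count explicitly, together with three zero-related test inputs: the zero divisor itself, the smallest valid divisor and a zero numerator.

```python
def average_cell_voltage_mv(total_mv: int, cell_count: int) -> int:
    """Hypothetical function under test: mean cell voltage in mV."""
    # A zero (or negative) cell count is rejected explicitly instead of
    # letting the division raise ZeroDivisionError.
    if cell_count <= 0:
        raise ValueError("cell_count must be positive")
    return total_mv // cell_count


# Zero-related test inputs as (total_mv, cell_count) pairs.
ZERO_CASES = [
    (0, 0),     # zero divisor: must be rejected, not crash
    (3700, 1),  # smallest valid divisor
    (0, 4),     # zero numerator: valid, result is 0
]


def run_zero_cases() -> list:
    """Return the result of each case, or 'rejected' if the input was refused."""
    results = []
    for total_mv, cell_count in ZERO_CASES:
        try:
            results.append(average_cell_voltage_mv(total_mv, cell_count))
        except ValueError:
            results.append("rejected")
    return results


print(run_zero_cases())  # ['rejected', 3700, 0]
```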

Normally, the input boundaries correspond directly to the boundaries of the output range. The test cases shall therefore force the output to its limit values, either through input boundaries that map directly onto the output range, or by evaluating which input values are needed to drive the output to its boundaries. Finally, where possible, a test should also be defined that causes the outputs to exceed the specified boundary values.
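To illustrate working backwards from the output boundaries, the sketch below assumes a hypothetical linear sensor transfer function (12-bit ADC code minus 500 gives the temperature in tenths of a degree Celsius; not taken from any datasheet). The inverse mapping yields the input codes that drive the output exactly to -20°C and 125°C, and one code beyond each of them pushes the output outside the specified range. The extremes of the input domain (codes 0 and 4095) are included as well.

```python
# Hypothetical transfer function (assumption, not from a datasheet):
# 12-bit ADC code 0..4095 -> temperature in tenths of °C = code - 500.
ADC_MAX = 4095
SPEC_MIN_DDEGC = -200   # -20.0 °C
SPEC_MAX_DDEGC = 1250   # 125.0 °C


def code_to_ddegc(code: int) -> int:
    """Forward mapping: input ADC code -> output temperature (0.1 °C)."""
    return code - 500


def ddegc_to_code(temp_ddegc: int) -> int:
    """Inverse mapping: input code that drives the output to temp_ddegc."""
    return temp_ddegc + 500


def output_in_spec(temp_ddegc: int) -> bool:
    return SPEC_MIN_DDEGC <= temp_ddegc <= SPEC_MAX_DDEGC


# Derive inputs from the output boundaries, then step one code beyond each
# boundary to try to push the output outside the specified range.
lower_code = ddegc_to_code(SPEC_MIN_DDEGC)  # 300
upper_code = ddegc_to_code(SPEC_MAX_DDEGC)  # 1750
test_codes = [0, lower_code - 1, lower_code, upper_code, upper_code + 1, ADC_MAX]

for code in test_codes:
    temp = code_to_ddegc(code)
    print(code, temp, output_in_spec(temp))
```

Codes 300 and 1750 produce exactly the output limits and are reported as within specification, whereas 299 and 1751 exceed them. Integer tenths of a degree are used so that the boundary comparisons are exact.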

As part of functional testing, boundary value analysis tests the boundary values of both valid and invalid partitions.

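Example: a temperature sensor with a valid range of -20°C to 125°C shall be tested.

Calculation of the boundary values for the valid range:

- min: -20°C
- min+1: -19°C
- nominal: a value within the range
- max-1: 124°C
- max: 125°C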

In general, for n variables to be checked, a maximum of 4n + 1 test cases is required. For n = 1, the maximum number of test cases is therefore:

4 × 1 + 1 = 5
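The 4n + 1 count follows from classic (non-robust) boundary value analysis: each variable in turn takes min, min+1, max-1 and max while all other variables stay at a nominal value, plus one case with every variable at nominal. A minimal Python sketch, assuming integer inputs and using the mid-range value (rounded down) as the nominal value, which is our choice for illustration:

```python
from typing import Dict, List, Tuple


def bva_test_cases(ranges: Dict[str, Tuple[int, int]], step: int = 1) -> List[Dict[str, int]]:
    """Classic boundary value analysis for integer inputs.

    Each variable takes min, min+step, max-step and max while the others
    stay at nominal; one extra case has all variables at nominal.
    Duplicates are dropped, so at most 4n + 1 cases are returned.
    """
    nominal = {name: (lo + hi) // 2 for name, (lo, hi) in ranges.items()}
    cases = [dict(nominal)]
    for name, (lo, hi) in ranges.items():
        for value in (lo, lo + step, hi - step, hi):
            case = dict(nominal)
            case[name] = value
            cases.append(case)
    unique: List[Dict[str, int]] = []
    for case in cases:
        if case not in unique:  # narrow ranges can produce repeated cases
            unique.append(case)
    return unique


temps = [case["temp"] for case in bva_test_cases({"temp": (-20, 125)})]
print(temps)  # [52, -20, -19, 124, 125]
```

For the temperature sensor this yields the five values above, 52 being the assumed nominal value. Adding a second variable, e.g. a hypothetical supply voltage range, gives 4 × 2 + 1 = 9 cases. Because duplicates are removed, 4n + 1 is a maximum: for very narrow ranges some of the values coincide.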

In addition, the invalid boundary values are tested at min-1 (-21°C) and max+1 (126°C).

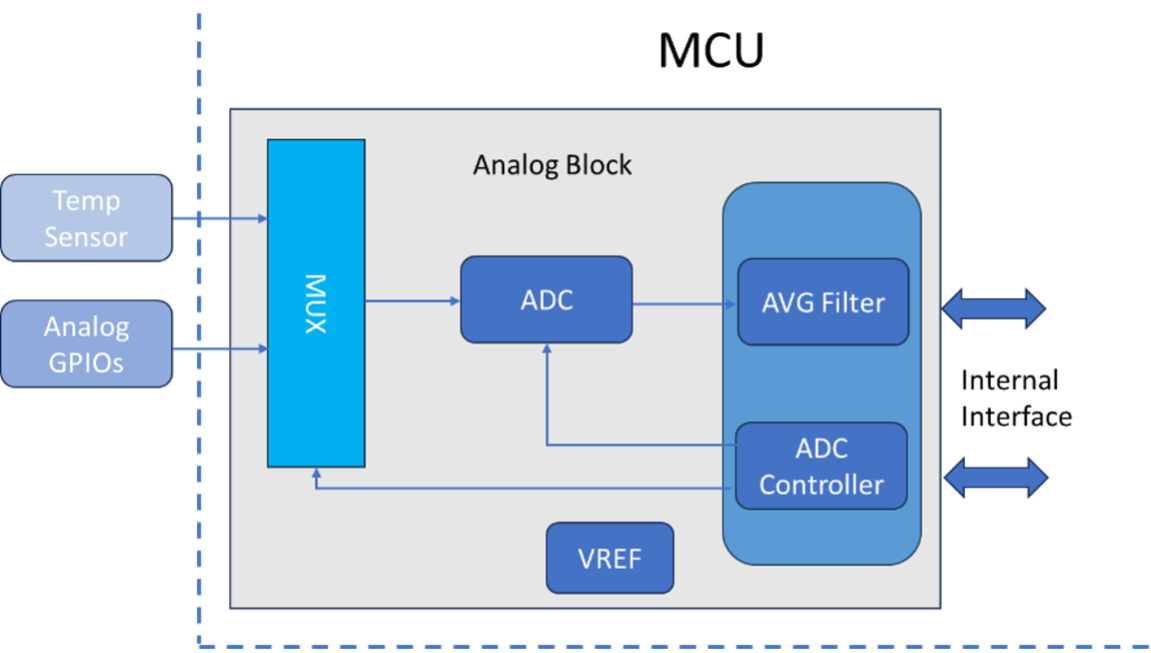
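Adding the two invalid values (min-1 and max+1) to the five valid ones gives seven boundary test cases for the sensor. The following sketch runs them against a hypothetical range check and against a variant containing a typical off-by-one defect (`<` instead of `<=`). The function names and the nominal value of 52°C are assumptions for illustration.

```python
# Boundary test table: (input in °C, expected validity).
BOUNDARY_CASES = [
    (-21, False),  # min - 1: invalid partition
    (-20, True),   # min
    (-19, True),   # min + 1
    (52, True),    # nominal (assumed mid-range value)
    (124, True),   # max - 1
    (125, True),   # max
    (126, False),  # max + 1: invalid partition
]


def is_valid_temperature(temp_degc: int) -> bool:
    """Hypothetical range check for the -20 °C to 125 °C sensor range."""
    return -20 <= temp_degc <= 125


def is_valid_temperature_buggy(temp_degc: int) -> bool:
    """Same check with a typical off-by-one defect: < instead of <=."""
    return -20 <= temp_degc < 125


def failing_cases(check) -> list:
    """Return the inputs for which the check disagrees with the expectation."""
    return [temp for temp, expected in BOUNDARY_CASES if check(temp) != expected]


print(failing_cases(is_valid_temperature))        # []
print(failing_cases(is_valid_temperature_buggy))  # [125]
```

Only the test case exactly at max exposes the defect; a test at a typical mid-range value would pass with both implementations.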

In an MCU, blocks related to safety mechanisms, e.g. temperature sensors and the ADC, can be tested using fault injection. The test cases can be derived using methods such as boundary value analysis.
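A minimal sketch of this idea, assuming a hypothetical over-temperature safety mechanism that flags a fault above 125°C and a simulated channel whose reading can be overridden to emulate an injected fault. On a real MCU the fault would be injected in hardware, in simulation or through a test mode, which this sketch does not model; it only shows how boundary-derived values (124°C, 125°C, 126°C) serve as injected stimuli.

```python
from dataclasses import dataclass
from typing import Optional

OVERTEMP_THRESHOLD_DEGC = 125  # assumed detection threshold (spec maximum)


@dataclass
class TemperatureChannel:
    """Simulated sensor/ADC channel with a fault-injection override (hypothetical)."""
    measured_degc: int = 25
    injected_degc: Optional[int] = None

    def read(self) -> int:
        # An injected value overrides the real measurement.
        if self.injected_degc is not None:
            return self.injected_degc
        return self.measured_degc


def overtemp_sm(channel: TemperatureChannel) -> bool:
    """Hypothetical safety mechanism: True means 'fault detected'."""
    return channel.read() > OVERTEMP_THRESHOLD_DEGC


def run_injection_tests() -> dict:
    channel = TemperatureChannel()
    results = {}
    for injected in (124, 125, 126):  # values from boundary value analysis
        channel.injected_degc = injected
        results[injected] = overtemp_sm(channel)
    channel.injected_degc = None      # remove the injected fault after the test
    return results


print(run_injection_tests())  # {124: False, 125: False, 126: True}
```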

Test cases at the hardware detailed design level shall demonstrate compliance with the requirements and, where applicable, also cover the test results for the integration level.

Error guessing is a testing technique in which experienced testers use their intuition and their knowledge of the system, or of similar designs, to derive additional test cases that do not fall into a predefined category, and use them for verification. The aim is to predict areas where defects are likely to occur. The technique relies on the testers’ knowledge of common or typical failures in similar systems, which is then turned into structured test cases.

Error guessing tests can be based on data collected through a lessons-learned process, on expert judgment, or on both. They can be supported, for example, by an FMEA.

The tester tries to identify common failures that have occurred in the past, such as boundary value issues, and then defines specific test cases that target these potential issues. Analyzing the defects found in similar projects (lessons learned) helps predict similar issues in the current system. Based on their experience and knowledge, testers can then use error guessing to explore the system design, testing various inputs and interactions that might lead to errors.
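As a minimal sketch of error guessing in software, assume that lessons learned from similar projects point to a few failure-prone inputs for a hypothetical averaging function: an empty sample buffer and a NaN or infinite value from a faulty channel, plus a single sample as a control. Running these guesses against a naive implementation and a guarded one shows which guesses hit real weaknesses. The catalogue entries and function names are illustrative assumptions.

```python
import math
from typing import Callable, Dict, List, Optional, Sequence


def mean_naive(samples: Sequence[float]) -> float:
    """Hypothetical function under test, written without defensive checks."""
    return sum(samples) / len(samples)


def mean_guarded(samples: Sequence[float]) -> Optional[float]:
    """Same function with checks for the guessed error cases."""
    if not samples or not all(math.isfinite(s) for s in samples):
        return None  # no usable result: the caller must handle this explicitly
    return sum(samples) / len(samples)


# Error-guessing catalogue (assumed lessons-learned entries).
ERROR_GUESSES: Dict[str, List[float]] = {
    "empty buffer": [],
    "single sample": [25.0],
    "NaN from faulty channel": [25.0, float("nan")],
    "infinite sample": [float("inf")],
}


def findings(func: Callable[[Sequence[float]], Optional[float]]) -> List[str]:
    """Names of guessed cases that make func crash or return a non-finite value."""
    failed = []
    for name, samples in ERROR_GUESSES.items():
        try:
            result = func(samples)
        except ZeroDivisionError:
            failed.append(name)
            continue
        if result is not None and not math.isfinite(result):
            failed.append(name)
    return failed


print(findings(mean_naive))    # ['empty buffer', 'NaN from faulty channel', 'infinite sample']
print(findings(mean_guarded))  # []
```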

Error guessing can lead to further methods of defining and applying test cases:

Error guessing can quickly identify potential errors in specific items of the system, using the tester’s knowledge and experience. It is a very flexible method that allows the team to adapt and respond to findings at short notice. It is a valuable technique for identifying defects that other testing methods might not cover, leveraging the unique insights and experience of skilled testers to enhance the overall testing process.

Dijaz Maric, QM & Reliability Engineering Consultant

Do you want to learn more about implementing ISO 26262, IATF 16949 or other standards in the automotive sector? We provide remote support and training to enhance your functional safety projects. Please contact us at info@lorit-consultancy.com for bespoke consultancy or join one of our upcoming online courses.

This method can also be applied at the hardware design level.

Power supply errors:

Stress impact:

Startup and shutdown sequences:

Hardware-Software interaction:

The examples above show how error guessing can be a useful technique in hardware testing, allowing testers to leverage their experience and intuition to uncover potential errors and help ensure robust, reliable hardware systems.

Error guessing relies on lessons learned and on the experience of the team. Semiconductor manufacturers often prepare failure/error catalogues for different blocks (digital/analogue), components and functions.
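Such a catalogue can be applied systematically by pairing every block of the design with the failure modes listed for its category. The sketch below assumes a hypothetical two-category catalogue and three hypothetical design blocks; the entries are illustrative only and not taken from any supplier catalogue.

```python
from typing import Dict, List, Tuple

# Hypothetical failure catalogue per block category (illustrative entries).
FAILURE_CATALOGUE: Dict[str, List[str]] = {
    "digital": ["stuck-at-0", "stuck-at-1", "bit flip"],
    "analogue": ["offset drift", "stuck output", "oscillation"],
}

# Hypothetical blocks of the design under test and their category.
DESIGN_BLOCKS: Dict[str, str] = {
    "ADC": "analogue",
    "temperature sensor": "analogue",
    "SPI interface": "digital",
}


def derive_error_guessing_tests(blocks: Dict[str, str]) -> List[Tuple[str, str]]:
    """Pair every block with each failure mode listed for its category."""
    return [
        (block, failure)
        for block, category in blocks.items()
        for failure in FAILURE_CATALOGUE.get(category, [])
    ]


tests = derive_error_guessing_tests(DESIGN_BLOCKS)
print(len(tests))  # 9
print(tests[0])    # ('ADC', 'offset drift')
```

The resulting list is only a starting point: the tester still decides, based on experience, which pairs are relevant and how each failure can be provoked.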

To conclude this blog series, I would like to revisit the concept of equivalence classes and show how it can reduce the testing effort for safety mechanism (SM) verification.

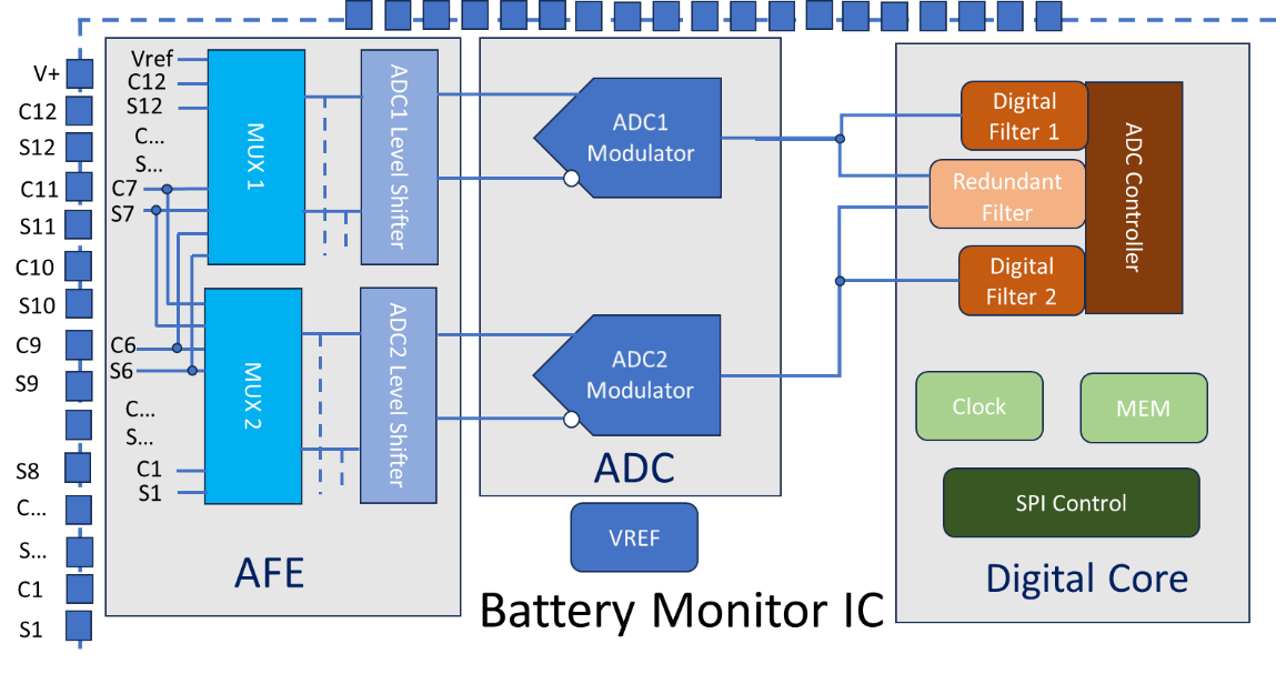

In the image above, we see a multi-channel battery monitor IC which, among other things, is responsible for monitoring cell voltages. To reduce the testing effort for individual safety mechanisms, we try to form so-called equivalence classes and use one test to verify multiple SMs. Let’s assume the following SMs are present:

We can define a set of tests that verifies all three safety mechanisms. For example, when we test SM1, we can also verify SM2.
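Selecting a small set of tests that together verify all SMs is a set-cover problem. The sketch below uses a greedy heuristic (our choice for illustration, not a method prescribed by ISO 26262) on a hypothetical coverage table in which, as in the example above, one test exercises both SM1 and SM2. In practice, which test covers which SM has to be justified from the SM specifications and the test design.

```python
from typing import Dict, List, Set

# Hypothetical coverage table: safety mechanisms exercised by each test.
# T1 covers SM1 and SM2, mirroring the example in the text.
TEST_COVERAGE: Dict[str, Set[str]] = {
    "T1": {"SM1", "SM2"},
    "T2": {"SM1"},
    "T3": {"SM3"},
    "T4": {"SM2"},
}


def select_tests(coverage: Dict[str, Set[str]], required: Set[str]) -> List[str]:
    """Greedy set cover: repeatedly pick the test that covers the most
    still-unverified SMs. A heuristic, not guaranteed to be minimal."""
    uncovered = set(required)
    selected: List[str] = []
    while uncovered:
        best = max(coverage, key=lambda test: len(coverage[test] & uncovered))
        gained = coverage[best] & uncovered
        if not gained:
            raise ValueError(f"no test covers {sorted(uncovered)}")
        selected.append(best)
        uncovered -= gained
    return selected


print(select_tests(TEST_COVERAGE, {"SM1", "SM2", "SM3"}))  # ['T1', 'T3']
```

Two tests instead of three verify all SMs here; the saving grows with the number of SMs that share a common stimulus.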

Equivalence classes, boundary values and error guessing all support you in defining test cases for the verification process in ISO 26262. Which of them are useful depends primarily on the risk analysis and on the ASIL (Automotive Safety Integrity Level) determined for the item or system in question.

I hope this blog helps you to better understand the methods discussed. If you want to learn more about the topic, feel free to contact us or book one of our courses on functional safety.

By Dijaz Maric, Quality Management & Reliability Engineering Consultant
